The software industry has long struggled with the challenge of software estimation. With numerous differing opinions on the best methods for estimating software development projects, there is no clear consensus.

This post explores why software estimates frequently fall short and offers guidance on how organisations can leverage them for better-informed decision-making during project planning. These strategies are also applicable to eCommerce, aligning with lean eCommerce brand practices. This article emphasises the importance of estimation before initiating a project.

Software development estimation should be an impartial analytical process. Project estimation determines the effort and time needed to finish a project or task. This information is crucial for making informed decisions about the project's direction.

Teams should see software estimates as analytical judgments, not quotes or negotiation tools, and avoid using them as targets, commitments, or goals to achieve. A common executive mistake is to treat software estimates as tender offers and choose the smallest one, which defeats their intended purpose.

Most estimates are inaccurate, and the error is not neutral: the industry statistics reviewed below show that software projects overrun their estimates far more often than they come in under them.

Choosing a partner for a software development project based solely on the smallest estimate leads to decisions based on incorrect information. Before a project begins, many options exist, such as making trade-offs over features or cancelling the project if the most realistic estimate is unacceptable. However, if poor forecasting has already consumed the budget, it will be too late to make these crucial decisions.

Over decades, researchers have extensively studied software development. Let's review these studies:

In 1992, Albert L. Lederer and Jayesh Prasad published a seminal work, highlighting that approximately two-thirds of all projects significantly exceed their estimates. This work offers nine management guidelines to improve cost-estimating practices.

These guidelines, drawn from industry best practices and outlined in various project management frameworks, emphasise the need for detailed scope definition. Clear project scope helps identify all necessary tasks and resources upfront, minimising the risk of unforeseen costs later. Another critical guideline is the importance of historical data analysis—leveraging past project costs and performance metrics to inform current estimates can significantly enhance accuracy.

Additionally, the guidelines stress the involvement of all stakeholders in the estimation process to ensure alignment and buy-in, thereby reducing the likelihood of misunderstandings or resistance during project execution.

Since 1994, The Standish Group has annually published reports on the success and performance of software engineering projects. The initial study sparked controversy in software engineering circles: only 16% of software projects were completed on time and within budget.

Over half of the projects underestimated their scope; nearly one-third failed and were abandoned. On average, actual costs exceeded estimates by approximately 100% and schedules overran by 120%. These figures likely understate the inaccuracy, as projects often cut significant functionality to meet deadlines and budgets.

The CHAOS Report 2015 revealed that 30% of projects were successful and 20% ultimately failed. Put differently, 70% of all software projects did not fully meet expectations. The report also highlighted that only 11% of Waterfall projects were successful, with a failure rate of 29%, closely mirroring findings from the 1994 report. In contrast, Agile projects had a 40% success rate and a failure rate of 9%. This stark contrast underscores the effectiveness of software development processes that do not rely heavily on upfront project estimation.

Both reports emphasise the critical role of user and executive management engagement. While a clear work breakdown structure was identified as crucial in 1994, its significance diminished by 2015. Software developers and project managers have responded to these challenges by adopting Agile methodologies, which enable them to navigate uncertain and evolving project requirements more effectively.

Steve McConnell's 2006 software estimation book, Software Estimation: Demystifying the Black Art, provides compelling insights based on data from a single company, Construx Software, where McConnell was the CEO.

According to a 2016 analysis by Deloitte, software development projects frequently face substantial challenges. The study found that these projects typically experience a 70% cost overrun, a 40% delay in delivery, and a 15% decrease in expected returns. The total cost overruns for all IT projects examined amounted to $72 billion, surpassing Slovenia's GDP.

Following the failure of the Virtual Case File (VCF) system, the FBI launched the Sentinel project to create a modern case management system.

Systematic Pitfalls:

The Sentinel project faced multiple delays and cost overruns, with initial estimates of $425 million ballooning to over $600 million. The project highlighted the difficulties in accurately estimating costs and timelines for complex, evolving projects.

The National Programme for IT was an ambitious project by the UK National Health Service (NHS) to create an integrated electronic health records system across England.

Systematic Pitfalls:

Initially estimated to cost £2.3 billion, the program ended up costing over £12 billion before being dismantled in 2011. Many components were either not delivered or not used, reflecting the difficulties in systematic estimation.

Determining what constitutes a reasonable estimate amidst frequent inaccuracies and unavoidable sources of error is crucial. A practical estimate should be honest, accurate, and precise enough to facilitate decision-making.

An estimate that aligns with an executive's preconceived notion rather than being honest is pointless and detrimental. Such estimates do not support informed decision-making, but rather perpetuate misconceptions.

The validity of a decision hinges on the accuracy of the estimate, where even minor errors can lead to significant repercussions. The primary goal is to decide, with confidence, whether to initiate a project. Moreover, a reliable estimate can aid in identifying which project features are essential for achieving financial objectives. However, it's important to recognise that initial estimates should not dictate project actions, as requirements often evolve.

An estimate that lacks precision, or that offers too broad a range of possibilities because uncertainty is high, is equally ineffective for decision-making. Executives need assurance that the forecast provides sufficient detail and reliability.

But how can executives determine if an estimate meets these criteria?

While businesses cannot predict the future, they rely on honest predictions to make informed decisions. A trustworthy estimator prioritises accuracy over convenience, advocating adjusting project scope to fit budget constraints rather than understating the hours required to complete features.

McConnell introduces a straightforward test in his book to gauge software estimation proficiency. For each of ten quantities, participants must provide upper and lower bounds that they are 90% confident contain the correct value. The exercise assesses forecasting ability rather than research skills. The average score among test-takers is 2.8 correct answers, and only 2% of participants achieve eight or more, which shows how commonly people overestimate their own confidence.
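A calibration test of this kind can be scored mechanically. The following is a minimal sketch, not McConnell's own procedure: the function name `calibration_hit_rate` and the sample numbers are hypothetical, and it only assumes that each answer is a (low, high) range compared against a known true value.

```python
from typing import Sequence, Tuple


def calibration_hit_rate(
    intervals: Sequence[Tuple[float, float]],
    actuals: Sequence[float],
) -> float:
    """Return the fraction of (low, high) ranges that contain the true value."""
    if len(intervals) != len(actuals):
        raise ValueError("intervals and actuals must have the same length")
    if not intervals:
        raise ValueError("at least one interval is required")

    hits = sum(
        1
        for (low, high), actual in zip(intervals, actuals)
        if low <= actual <= high
    )
    return hits / len(intervals)


# Hypothetical answers: four ranges given at "90% confidence" and the true values.
answers = [(1, 3), (10, 20), (0, 5), (100, 200)]
truths = [2, 25, 5, 150]

rate = calibration_hit_rate(answers, truths)
print(f"Hit rate: {rate:.0%} (a well-calibrated estimator would be near 90%)")
```

A hit rate far below the stated confidence level suggests the ranges are too narrow, which is the pattern the test is designed to expose.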

Instead of relying on improved guessing skills, McConnell advocates minimising uncertainty through more systematic approaches. For instance, estimating effort based on straightforward calculations rather than guesswork can significantly enhance accuracy:

$$\text{Effort Estimation} = \text{NumberOfRequirements} \times \text{AverageEffortPerRequirement}$$
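As a rough illustration of this count-and-multiply approach, the sketch below derives the average effort from past work rather than from intuition. The function name `estimate_effort` and the historical figures are hypothetical, and hours are an assumed unit.

```python
from statistics import mean
from typing import Sequence


def estimate_effort(
    requirement_count: int,
    historical_hours_per_requirement: Sequence[float],
) -> float:
    """Multiply the number of requirements by the average historical effort."""
    if requirement_count < 0:
        raise ValueError("requirement_count must not be negative")
    if not historical_hours_per_requirement:
        raise ValueError("historical data is required to compute an average")

    average_effort = mean(historical_hours_per_requirement)
    return requirement_count * average_effort


# Hypothetical history: hours actually spent per requirement on past projects.
past_hours = [10.0, 14.0, 12.0]
print(estimate_effort(40, past_hours))
```

The estimate is only as good as the history behind it, so the sample should come from comparable projects and teams.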

However, some tasks may deviate significantly from the average, necessitating a more nuanced approach. In such cases, employing a PERT formula, which combines optimistic, most likely, and pessimistic estimates, can provide a balanced perspective:

$$\text{PERT} = \frac{\text{OptimisticEffort} + 4 \times \text{MostLikelyEffort} + \text{PessimisticEffort}}{6}$$

These methods underscore the importance of data-driven estimation practices over subjective guesswork, ensuring more reliable project planning and execution.

Understanding that software development estimation involves a spectrum of possibilities rather than a single figure is crucial. When employing techniques like PERT, it's essential to establish the range using standard deviation. However, achieving this precision requires historical error data from comparable projects to calibrate this deviation, adding complexity to the software development estimation process.
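To make the idea of a range concrete, here is a minimal sketch of a three-point estimate. It assumes the conventional PERT weighting, (optimistic + 4 × most likely + pessimistic) / 6, and the common textbook approximation of the standard deviation as (pessimistic − optimistic) / 6. The class name, the `spread` parameter, and the sample numbers are hypothetical, and the divisor of 6 is exactly the kind of value that historical error data would be used to recalibrate.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ThreePointEstimate:
    optimistic: float
    most_likely: float
    pessimistic: float

    def __post_init__(self) -> None:
        # The three inputs must be ordered for the formula to make sense.
        if not (self.optimistic <= self.most_likely <= self.pessimistic):
            raise ValueError("expected optimistic <= most_likely <= pessimistic")

    @property
    def expected(self) -> float:
        """Weighted mean that favours the most likely case."""
        return (self.optimistic + 4 * self.most_likely + self.pessimistic) / 6

    @property
    def std_dev(self) -> float:
        """Textbook approximation; recalibrate the divisor from past error data."""
        return (self.pessimistic - self.optimistic) / 6

    def estimate_range(self, spread: float = 2.0) -> Tuple[float, float]:
        """Return (low, high) as expected -/+ spread standard deviations."""
        margin = spread * self.std_dev
        return (self.expected - margin, self.expected + margin)


# Hypothetical task: 10 hours at best, 16 most likely, 40 at worst.
task = ThreePointEstimate(optimistic=10, most_likely=16, pessimistic=40)
print(task.expected)          # 19.0
print(task.std_dev)           # 5.0
print(task.estimate_range())  # (9.0, 29.0)
```

Reporting the range alongside the expected value keeps the uncertainty visible to whoever makes the decision.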

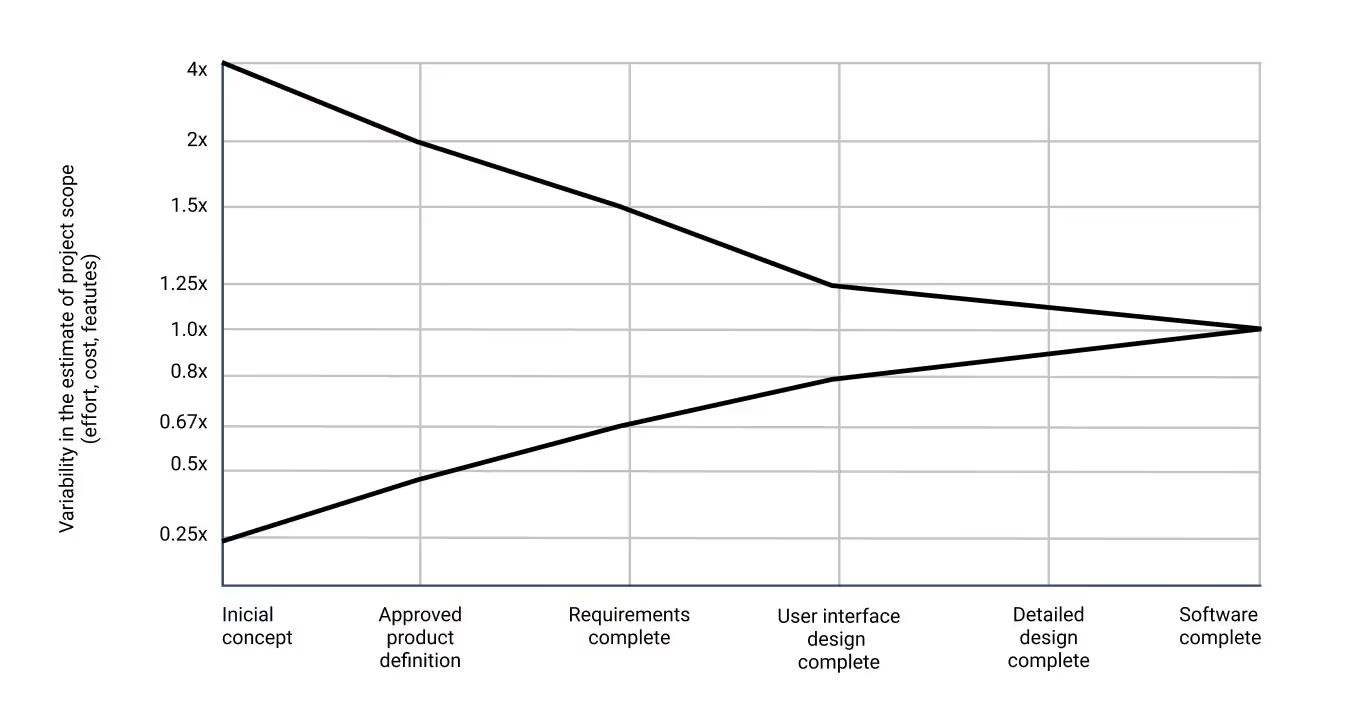

It's essential to recognise that the Cone of Uncertainty illustrates the maximum likely accuracy of estimation practices across different project phases. The cone graph visualises the margin of error in estimates made by experienced estimators, with the understanding that outcomes can often be less favourable. Achieving greater accuracy than depicted in the cone is aspirational rather than guaranteed.

Another crucial aspect is that an estimate may not improve over time if project management is inadequate or the estimators lack expertise. When a project fails to focus on reducing uncertainty, the Cone turns into a lingering Cloud that persists until project completion.

What does this mean for executives reviewing estimates? Teams often provide estimates before fully defining requirements, as these requirements can evolve throughout the project lifecycle. According to the Cone of Uncertainty, the best achievable precision at this early stage ranges from 0.67 times to 1.5 times the initial estimate. Therefore, if the upper bound is not at least 2.24 times the lower bound (1.5 / 0.67), the range is narrower than the cone allows, and the forecast may not be accurate and reliable enough.
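The check described here reduces to a little arithmetic. The sketch below assumes the early-stage multipliers quoted above (0.67 and 1.5) and reads a range narrower than their ratio as a sign of overconfidence. The function names and sample figures are hypothetical.

```python
from typing import Tuple

# Early-stage Cone of Uncertainty multipliers quoted in the text.
EARLY_LOW_FACTOR = 0.67
EARLY_HIGH_FACTOR = 1.5


def cone_range(
    point_estimate: float,
    low_factor: float = EARLY_LOW_FACTOR,
    high_factor: float = EARLY_HIGH_FACTOR,
) -> Tuple[float, float]:
    """Widen a single-point estimate into the best-case early-stage range."""
    return (point_estimate * low_factor, point_estimate * high_factor)


def is_suspiciously_narrow(
    lower: float,
    upper: float,
    low_factor: float = EARLY_LOW_FACTOR,
    high_factor: float = EARLY_HIGH_FACTOR,
) -> bool:
    """True when upper/lower is smaller than the ratio the cone implies."""
    if lower <= 0 or upper < lower:
        raise ValueError("expected 0 < lower <= upper")
    minimum_ratio = high_factor / low_factor  # roughly 2.24
    return upper / lower < minimum_ratio


# Hypothetical vendor ranges, in hours.
print(cone_range(1000))
print(is_suspiciously_narrow(800, 1200))  # ratio 1.5, narrower than the cone
print(is_suspiciously_narrow(600, 1500))  # ratio 2.5, consistent with the cone
```

A narrow range is not proof of a bad estimate, but at this stage it is a reason to ask what evidence supports the extra precision.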

How do we approach defining requirements when we anticipate changes throughout the project? Agile ecommerce development introduces a powerful technique called the user story. Unlike formal requirements, a user story is a commitment to future communication.

Examples include "A user can add a product to their cart" or "A user can complete a payment for an order."

The "INVEST" acronym guides the creation of compelling user stories: a good story is Independent, Negotiable, Valuable, Estimable, Small, and Testable.

These principles foster agility by enabling flexible and iterative development processes that adapt to evolving requirements throughout the project lifecycle.

Here are some prevalent mistakes that frequently lead to underestimating software projects:

By addressing these pitfalls early in the project lifecycle, teams can improve the accuracy of their software project estimates and mitigate potential challenges later on.

It is crucial to recognise that an estimate is an analytical tool, not a commitment or quotation. Project estimation should guide decision-making rather than serve as a competitive bidding tool.

Here are some recommendations to enhance the utility of business estimates:

Use these as a practical guide for executives navigating project assessments.

Author Alex Borodin, COO at VT Labs
